In my previous blog post, I showed how important reliability is for OneLogin and how we measure it.

In this small series of “Designing OneLogin for Scale and Reliability” blog posts, I am going to show how we designed OneLogin to achieve a highly reliable and highly scalable product.

OneLogin started about ten years ago. Over the course of startup-like growth, it accumulated a lot of historical baggage: components that were poorly designed (or not designed at all), many bottlenecks, “legacy” infrastructure, … all the normal things that happen when a company grows, hiring accelerates and controls are lacking.

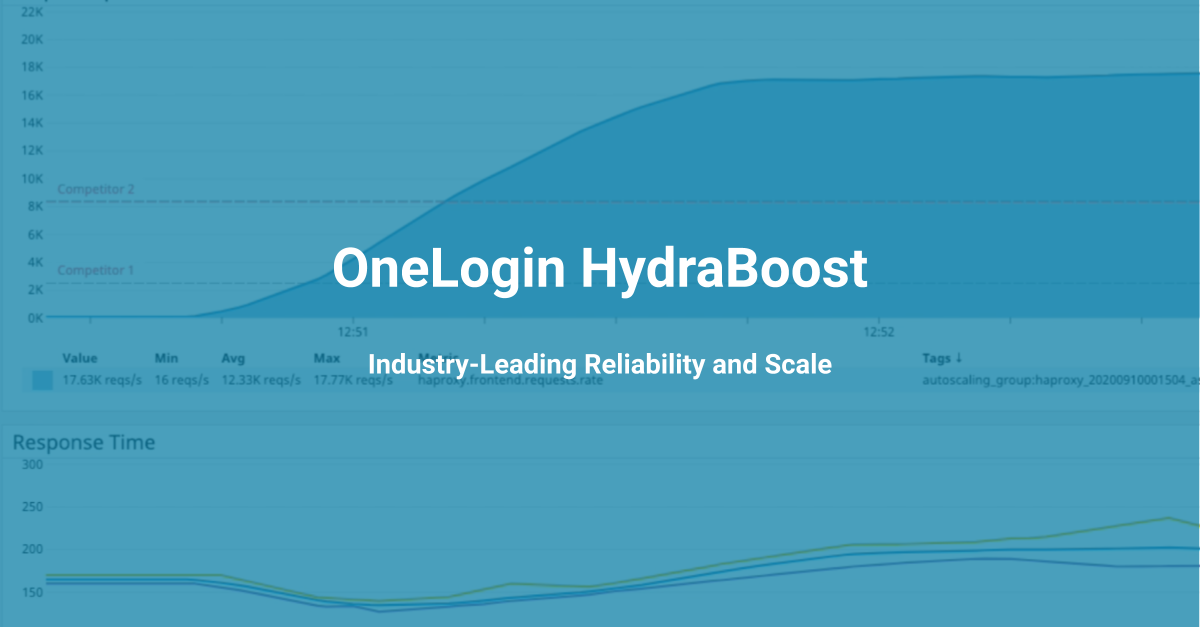

About 1.5 years ago, we launched our “Hydra” initiative, focused on reliability and scalability, and with it our major “Hydra Cloud Infrastructure” redesign projects.

Goals

Good product design or redesign starts with goals. Goals are important so you know what your new design must meet and, maybe even more importantly, what it does not need to meet, so you avoid over-engineering the solution.

Here were our goals:

- Achieve 99.999% (five nines) consistent Reliability for our end users

- Scale to 100x of current “normal” throughput for all major authentication flows

Notes:

See the previous blog post on how we define and measure reliability.

In OneLogin, we process thousands of requests per second.
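To make the first goal concrete, here is a minimal sketch of the error budget that five nines implies. It assumes a simple time-based reading of availability and a hypothetical steady load of 1,000 requests per second (the note above only says “thousands”). The previous post defines how we actually measure reliability, which may differ from this simplification.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # non-leap year, for simplicity


def downtime_budget_seconds(target: float, period_seconds: int = SECONDS_PER_YEAR) -> float:
    """Seconds of allowed unavailability in the period for a target such as 0.99999."""
    return (1.0 - target) * period_seconds


def failed_request_budget(target: float, requests: int) -> float:
    """Number of requests that may fail while still meeting the target."""
    return (1.0 - target) * requests


if __name__ == "__main__":
    five_nines = 0.99999
    print(f"{downtime_budget_seconds(five_nines) / 60:.2f} minutes per year")
    # Hypothetical load: 1,000 requests per second for one day.
    print(f"{failed_request_budget(five_nines, 1_000 * 86_400):.0f} failed requests per day")
```

Under these assumptions, five nines leaves roughly 5.26 minutes of downtime per year, or about 864 failed requests per day.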

Key Principles and Constraints

Before we started designing our OneLogin Hydra Cloud Infrastructure, we defined several key principles of the new architecture as well as constraints within which we need to operate.

Security first – Designing software systems consists of many tradeoffs. We are not willing to sacrifice security.

Reliability is a key feature – High reliability is not just one of the non-functional requirements, but a key feature and differentiator of our platform.

Platform over product – IDaaS is historically perceived as a product or service, but we are ultimately building a high-scale Identity Platform.

No single point of failure – There must not be a single point of failure (or component) at any layer of our architecture on the path of any end user flow.

Master/Replicas DB storage – Postgres is our primary DB store, and it is unrealistic to replace it with something else. We want to embrace its constraints, such as the Postgres master-slave model.

Immutable infrastructure as code – All infrastructure is fully described in code and stored in Git, and all processes are automated: no manual operator changes (aka Click-Ops), no pets. All deployed artifacts (servers, services, …) are immutable.

Key Insights

Modern site reliability and scalability technology doesn’t provide a one-size-fits-all solution. Different types of services and systems will have different approaches. The key is to find and embrace the facet or facets that make your problem approachable.

The following are key insights from our experience operating OneLogin that served as key factors driving decisions, tradeoffs and eventual design of the OneLogin Hydra Cloud Infrastructure.

Our tenants are not interconnected

Our tenants (customer entities) are not interconnected. They do not share data or interact with each other.

The product is designed as multi-tenant to allow more efficient use of underlying resources, but we are free to separate tenants to achieve higher scale, independence, security, different compliance requirements or lower latency.

Administration vs end user operations are vastly different and essentially independent

There are three main groups of operations:

- End user actions – mostly users authenticating to sign in to third-party applications

- Administrative tasks – mostly consisting of configuring the product

- Batch and job processing – as a result of administrative actions or scheduled events like user synchronizations, huge amounts of compute and/or time intensive operations need to be performed in the background

These three types of operations have different levels of SLA, criticality, scale requirements, audience, etc.

End user login is our most critical function and is essentially a read-only operation

The primary function of OneLogin is end user login:

End user login is any request to OneLogin on behalf of an end user attempting to access OneLogin, authenticate to OneLogin, or authenticate to or access an app via OneLogin, whether via the OneLogin UX, a supported protocol, or the API.

End user login is in most cases a read-only operation that reads (a subset of) data, but rarely needs to write it.

Complementary end user login operations need to happen quickly, but not necessarily immediately

End user login contains many complementary operations that are necessary for a fully functioning service. These operations need to happen quickly, but not necessarily immediately and/or synchronously.

A Few Words About our Deployment

Before I jump to the next section, it helps to provide a little context on our operations.

OneLogin is designed as a multi-tenant product. It is deployed on the Amazon Web Services (AWS) cloud computing platform and is divided into shards. Each shard hosts tens of thousands of customers. A shard is the highest level of isolation – a completely independent, isolated deployment with its own independent data storage.

Currently, we have two shards, an EU shard and a US shard, and we are in the process of building a government shard for FedRAMP usage.

Each shard is deployed in multiple active AWS regions (no single point of failure).

In each region all services of the platform are evenly spread over multiple availability zones.

Figure – Shards and regions

OK, that was a short sidetrack. Now let’s jump back to the main topic.

Login vs Admin Operations

When we put together the Principles, Goals and Key Insights, it became obvious that we are dealing with two main tiers that have different audiences, with different objectives and different requirements for reliability, scale and usage. It seemed possible to design these two tiers to be operationally independent of each other.

We started calling them “Login” and “Admin”:

- Login is processing end user sign-in to third-party applications

- Admin mostly serves administrators who configure OneLogin accounts or run background synchronization jobs with account directories and third-party applications

To summarize the key differences:

Login

- Audience: end users

- High scale, low latency, expected immediate response

- Reliability is critical

- Mostly read-only operations – once the flows are configured, the logins are mostly read operations with some side effects

Admin

- Audience: administrators

- Much smaller synchronous traffic (administrators configuring the product)

- Very high number of asynchronous jobs (directory synchronizations and third-party software provisioning)

- Heavy on write operations

This was our key design paradigm that would allow us to achieve the level of scale and reliability we needed.

Given our master/slave DB topology, it would be extremely hard (or impossible) to achieve five nines of reliability, or super-high scale, for write operations. But when we completely separate Login operations (realizing that they are mostly read-only), we can assign them an “unlimited” number of read-only replicas (or any other backend resources) and achieve extremely high reliability and scale.

The Admin part still relies on some of the crucial limited resources (like the master DB), but it does not have such extreme reliability requirements. Its operations can also largely be made asynchronous, which makes them much easier to scale to the level we need.
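The reasoning above can be illustrated with a minimal sketch of read/write splitting. The class and endpoint names are hypothetical and this is not our actual code: reads rotate across any number of replicas, while every write has to go to the single master.

```python
from itertools import cycle
from typing import Iterator, List


class ReadWriteRouter:
    """Send reads to replicas in round-robin order and writes to the one master."""

    def __init__(self, master: str, replicas: List[str]) -> None:
        if not replicas:
            raise ValueError("at least one read replica is required")
        self.master = master
        self._replicas: Iterator[str] = cycle(replicas)

    def endpoint_for(self, is_write: bool) -> str:
        # Writes are bounded by the single master; reads scale with replica count.
        return self.master if is_write else next(self._replicas)


# Hypothetical endpoint names, for illustration only.
router = ReadWriteRouter("master-db", ["replica-1", "replica-2", "replica-3"])
reads = [router.endpoint_for(is_write=False) for _ in range(4)]
```

Adding a replica to the list raises read capacity without touching the write path, which is why the read-mostly Login tier is the one that can be scaled almost without limit.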

We decided that the goal of five nines reliability (and our primary focus) will be applied towards the Login tier as this is the most critical functionality. Admin tier will still need decent reliability, but our main focus in that area is on scaling it enough to keep up with all the login and job operations.

With that, we started designing our clusters.

Clusters

A cluster is a bundled set of stateless (micro-)services with dedicated functionality. Currently, we have two types of clusters:

- Login clusters – processing end user flows

- Admin clusters – for admin tasks and job processing

Admin Cluster and Primary Region

The Admin/Login Clusters largely follow the master/slave DB architecture.

At any time, exactly one region in each shard is primary. The other region(s) are secondary.

The primary region contains an active admin cluster and the master (read-write) Postgres database.

The admin cluster is where (the majority of) write operations are being processed – administrative tasks, events and batch/job processing – and operations results stored to the master database.
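As a rough sketch, the “exactly one primary region per shard” rule can be expressed as a small data model. The types and the region names below are assumptions made for illustration, not our real configuration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Region:
    name: str
    is_primary: bool = False  # the primary hosts the admin cluster and the master DB


@dataclass
class Shard:
    name: str
    regions: List[Region]

    def primary(self) -> Region:
        """Return the single primary region, enforcing the exactly-one rule."""
        primaries = [r for r in self.regions if r.is_primary]
        if len(primaries) != 1:
            raise ValueError(f"shard {self.name!r} has {len(primaries)} primary regions")
        return primaries[0]

    def secondaries(self) -> List[Region]:
        """All regions that are not primary."""
        return [r for r in self.regions if not r.is_primary]


# Hypothetical region names, for illustration only.
us = Shard("us", [Region("region-a", is_primary=True), Region("region-b")])
```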

Login Clusters and Secondary Regions

Now we had all the info to start designing the Login clusters!

Any region that is not primary is called a secondary region. Secondary regions contain only read-only database replicas (not the master database).

End user flows are routed to and processed in Login clusters; we use a set of rules in our edge proxies that decide between Login and Admin tier.

Login clusters are deployed in both primary and secondary regions, which allows us better scale, reliability and also to move end user operations closer to users. Login clusters are completely decoupled and isolated from admin clusters (even in the primary region reading from their own replicas) to ensure that disruptions at the admin cluster can never impact end users.

Figure – routing from edge proxy to cluster
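The tier decision in the edge proxy can be pictured as a simple rule match. This is only a sketch: the path prefixes are invented, the real rule set is not described in this post, and defaulting to the Login tier is an assumption.

```python
from typing import Tuple

# Hypothetical path prefixes; the real rule set is not described in this post.
ADMIN_PREFIXES: Tuple[str, ...] = ("/admin", "/api/admin", "/jobs")


def choose_tier(path: str) -> str:
    """Return "admin" for administrative paths and "login" for everything else."""
    # Match the prefix itself or anything below it, but not look-alike paths.
    if any(path == p or path.startswith(p + "/") for p in ADMIN_PREFIXES):
        return "admin"
    return "login"
```

For example, `choose_tier("/admin/users")` returns `"admin"`, while `choose_tier("/login")` and `choose_tier("/administrator")` both return `"login"`.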

Login Clusters: Reliability

Login clusters have both regional and cross-regional active-active redundancy:

- Each region contains two login clusters. Both are active and, under normal conditions, split the region’s traffic 50/50.

- All regions (including the primary region) contain login clusters that take traffic from the closest end users (we use latency-based routing to the closest region)

Figure – 50/50% traffic split between login clusters

This design allows us to redirect a percentage of traffic or execute full failover out of the unhealthy cluster (within region) or out of the whole region.
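A minimal sketch of such a weighted split follows. The cluster names and weights are hypothetical, and a real proxy would apply the weights itself rather than call a Python function; the point is only that a partial shift and a full failover are the same mechanism with different weights.

```python
import random
from typing import Dict, Optional


def pick_cluster(weights: Dict[str, float], rng: Optional[random.Random] = None) -> str:
    """Pick a cluster with probability proportional to its weight."""
    if rng is None:
        rng = random.Random()
    active = {name: w for name, w in weights.items() if w > 0}
    if not active:
        raise RuntimeError("no cluster is accepting traffic")
    names = list(active)
    return rng.choices(names, weights=[active[n] for n in names], k=1)[0]


normal = {"login-a": 50, "login-b": 50}    # regular 50/50 split
partial = {"login-a": 10, "login-b": 90}   # shift most traffic away from login-a
failover = {"login-a": 0, "login-b": 100}  # full failover to login-b
```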

Cluster Failover

The edge proxy registers a service-specific backend for each service running in the cluster. When any of the services in one login cluster is detected as unhealthy, traffic is fully switched to a healthy backend in the second login cluster within the region.

The cluster failover can also be executed, typically as an explicit operator action, for the whole login cluster. In that case all user traffic in a given region is sent to only one of the available login clusters.

Before a full failover, checks make sure the target cluster is pre-scaled to handle the current traffic, so that it is not overloaded.

Figure – failover of unhealthy login cluster
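The pre-scaling check can be sketched as follows. The `Cluster` type, the per-node throughput and the 20% headroom are all assumptions for illustration; the actual checks and thresholds are not covered in this post.

```python
import math
from dataclasses import dataclass


@dataclass
class Cluster:
    name: str
    nodes: int
    rps_per_node: float  # assumed sustainable requests per second per node
    current_rps: float   # traffic the cluster serves right now


def nodes_needed(target: Cluster, extra_rps: float, headroom: float = 0.2) -> int:
    """Nodes the target needs to absorb extra_rps with some spare headroom."""
    total = (target.current_rps + extra_rps) * (1 + headroom)
    return math.ceil(total / target.rps_per_node)


def fail_over(source: Cluster, target: Cluster) -> None:
    """Scale the target first, then move all of the source's traffic to it."""
    required = nodes_needed(target, source.current_rps)
    if target.nodes < required:
        target.nodes = required  # stand-in for a real scale-up call
    target.current_rps += source.current_rps
    source.current_rps = 0.0
```

The ordering is the important part: capacity is added before traffic moves, so the healthy cluster is never asked to serve load it has not been sized for.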

Region Failover

The same partial or full failover can be performed on the whole region!

The full region failover is typically performed as incident mitigation or restorative action. During region failover, the target region capacity is first checked and scaled up to handle the added load. Then the new requests are routed to the failover region.

We use AWS Global Accelerator and the AWS global network to route users’ requests to the closest region. Global Accelerator allows us to shape the traffic and redirect a percentage or all of the traffic out of the unhealthy region.

Figure – region failover with pre-capacity match
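To show how a per-region percentage redirects traffic, here is a simplified model of a traffic dial. It is not the Global Accelerator API, the region names are hypothetical, and the spill-over rule (whatever a region does not accept goes to the next-closest one) is an assumption made for this sketch.

```python
from typing import Dict, List


def distribute(regions_by_latency: List[str], dial: Dict[str, float]) -> Dict[str, float]:
    """Share of one client's traffic per region, closest region first.

    dial maps a region name to the percentage (0-100) of offered traffic it
    accepts; the rest spills over to the next-closest region.
    """
    remaining = 100.0
    shares: Dict[str, float] = {}
    for region in regions_by_latency:
        accepted = remaining * dial.get(region, 0.0) / 100.0
        shares[region] = accepted
        remaining -= accepted
    if remaining > 1e-9:
        raise RuntimeError("some traffic has nowhere to go; keep one region at 100")
    return shares
```

With both dials at 100, the closest region takes everything. Lowering the closest region’s dial to 25 moves 75% of that client’s traffic to the next region, and setting it to 0 is a full region failover.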

Writing Data From a Login Cluster

Each login cluster is connected to three read-only database replicas – all login services are able to operate without direct access to the read/write (master) database. Most of the operations during end user login flows are read-only, but not all of them. There are several ways that write operations can be executed from a login cluster:

- An operation is put in a queue and sent via a message broker to the admin cluster, where it is processed by a job worker and stored in the master DB. The DB replication (from master DB to replicas) then distributes the update back to the replica DBs in all regions (and clusters).

Figure – processing write operations asynchronously

- In some cases (for a subset of high-scale processing and for non-relational data), we leverage AWS DynamoDB with global tables. The operation is written to DynamoDB in the secondary region and then global tables distribute it to all other regions.

- For a subset of operations, only region-local stores are necessary. In that case, AWS ElastiCache is (usually) leveraged.

Figure – using DynamoDB global tables and (regional) ElastiCache

- In certain exceptional cases, a synchronous write action is needed (for example just-in-time user creation during the login). In that case the call is routed as if it was performed from outside – not “east-west,” but as a “north-south” call – leveraging all routing layers of our infrastructure.

Figure – performing cross-cluster synchronous operation
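The first, asynchronous option above can be sketched with an in-memory queue standing in for the message broker and plain dictionaries standing in for the master DB and its replicas. All names here are hypothetical; the sketch only shows the direction of data flow.

```python
import queue
from typing import Dict, List, Tuple

Write = Tuple[str, str]  # (key, value)


class AdminCluster:
    """Owns the master store and replicates every applied write to the replicas."""

    def __init__(self, replicas: List[Dict[str, str]]) -> None:
        self.master: Dict[str, str] = {}
        self.replicas = replicas

    def drain(self, broker: "queue.Queue[Write]") -> int:
        """Job worker: apply all queued writes to the master, then replicate."""
        applied = 0
        while True:
            try:
                key, value = broker.get_nowait()
            except queue.Empty:
                return applied
            self.master[key] = value
            for replica in self.replicas:  # stand-in for DB replication
                replica[key] = value
            applied += 1


broker: "queue.Queue[Write]" = queue.Queue()
login_replica: Dict[str, str] = {}
admin = AdminCluster([login_replica])

# Login cluster: enqueue the write instead of touching the master DB.
broker.put(("last_login:alice", "t1"))
visible_before = "last_login:alice" in login_replica  # False: not replicated yet
admin.drain(broker)
visible_after = "last_login:alice" in login_replica   # True once replication ran
```

The login cluster never needs a connection to the master DB. The trade-off is that the write becomes visible to readers only after the worker and the replication have run, which is acceptable for operations that need to happen quickly but not immediately.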

Login Clusters: Horizontal Scalability

Scalability is the property of a system to handle a growing amount of work by adding resources to the system. A system is considered scalable when it doesn’t need to be redesigned to maintain effective performance during or after a steep increase in workload.

There are two strategies to achieve scalability:

- Vertical Scalability (or Scaling Up) is about making a component bigger or faster so that it can handle more load. Typically adding CPU or memory to a single machine.

- Horizontal scalability (or Scaling Out) addresses the problem by adding more components to the system to spread out a load. In other words, add more “machines” to the pool of resources.

Login Clusters (and our whole OneLogin Hydra Infrastructure) are designed for full Horizontal Scalability. It takes the concept and pushes it even further by scaling out at multiple architectural levels:

- Scale out node resources in a cluster – This automatically adds (or removes) various resources in the cluster, like EC2 instances, services, proxies and databases.

- Scale out whole login clusters – Thanks to our login cluster design, clusters are completely independent, yet connected to the whole system, so we can scale out at the level of whole clusters. A scaled-out cluster can be deployed in the same region or in a completely different (new) region.

This additional cluster-level scale-out also deals with the potential limitations of scaling out to thousands of nodes in one cluster. Additionally, it provides another level of reliability and resiliency, as each of these Login clusters can take the load of any other, or we can redistribute traffic between them.
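A back-of-the-envelope sketch of the two levels of scale-out follows. The throughput per node, the per-cluster node limit and the baseline load are invented numbers, used only to show how a per-cluster limit turns “more nodes” into “more clusters”.

```python
import math
from typing import Tuple


def plan_scale_out(target_rps: float, rps_per_node: float, max_nodes_per_cluster: int) -> Tuple[int, int]:
    """Return (clusters, nodes_per_cluster) needed to serve target_rps."""
    nodes = math.ceil(target_rps / rps_per_node)
    # Once one cluster would exceed its node limit, add whole clusters instead.
    clusters = max(1, math.ceil(nodes / max_nodes_per_cluster))
    return clusters, math.ceil(nodes / clusters)


# Hypothetical numbers: 2,000 rps today, 100 rps per node, 500 nodes per cluster.
today = plan_scale_out(2_000, 100, 500)          # (1, 20)
hundred_x = plan_scale_out(2_000 * 100, 100, 500)  # (4, 500)
```

With these made-up inputs, today’s load fits in one cluster of 20 nodes, while 100x the load needs four clusters of 500 nodes each instead of a single 2,000-node cluster.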

Combined with our multi-tenant design, we can efficiently provide “unlimited” resources from any of the login clusters to any tenant.

While the system described above already allows for a massive, efficient and highly maintainable IDaaS platform, we can also scale out at the top level of isolation, the shard. As each shard is a completely independent and isolated entity, by adding new shards we can theoretically scale out to any number of tenants.

Cluster Architecture

Each cluster is implemented as a standalone Kubernetes (AWS EKS) cluster.

Its architecture, design and deployment are a complex topic, worth covering more deeply in one of our following blog posts.

Summary

There are multiple ways to design systems for scale and reliability and (as always) there is no silver bullet.

Looking into how our product is used, separating key audiences and deciding what we need to focus on and (maybe even more importantly) what not, made us realize the key concepts of our future solution.

Writing the concepts down and then using them to guide each design decision on the path made us build a scalable and reliable product which we are very proud of (see our path to reliability).

Look at how your product is used and find your own Key Principles, Constraints and Insights. I hope this blog will help you on that path!

Check out Part 2 of our series on Designing OneLogin for Scalability and Reliability!